Capture and Analyze all the Things

You’ve probably been in a situation where you wanted to get some usable information out of your web traffic. So, you do some Googlin’, learn where your Apache logs are stored, and try to make sense of them. Unfortunately, the logs are dense and repetitive, and they don’t offer an easy way to correlate individual lines into something useful. Still dissatisfied, you do some more Googlin’, come across a horde of paid online services, and think to yourself: there’s got to be another way! Well, there is: GoAccess. In this article I’m going to show you how to install it and start connecting the dots in your data, all for the small sum of zero dollars. Free is good, right?

So, what is GoAccess? In the project’s own words:

GoAccess was designed to be a fast, terminal-based log analyzer. Its core idea is to quickly analyze and view web server statistics in real time without needing to use your browser (great if you want to do a quick analysis of your access log via SSH, or if you simply love working in the terminal).

It functions in two really powerful ways:

- Live analysis tool (Seriously, it refreshes on the fly)

- HTML or JSON export options

In this article, I will cover both, with just enough detail to get you up and running fast! This tool is awesome. Five years ago I was where you are now, so (shameless plug) I wrote my own free and open source Apache log analyzer: Feather. Even so, I wanted to get more out of my data. I really wanted a graphical dashboard that gave me a snapshot of all the important metrics by month and year, so I could look for ways to improve my online presence. So I went through the same process I described at the start, and I too arrived at the thought: there has to be a better way. Then I found it, and it has since been fully integrated into my daily routine.

Installing GoAccess

In this demo I use Fedora, but you can swap the dnf command for the equivalent APT, Zypper, or pacman command as needed. GoAccess is packaged in the repositories of all the popular distributions.

sudo dnf install goaccess

…and that’s it. Now that it’s installed, let’s see how it works as a “live analysis tool.” I am assuming you are using Apache and know the path to your logs. I use virtual hosts so I can host multiple sites on one server, and I set up a separate log for each domain and subdomain. A shell script, run by cron, then parses those logs with goaccess and pushes out the reports. In what follows, I assume your log is stored in the default location under the default name (/var/log/httpd/access_log on Fedora). With that said, let’s run the following command:

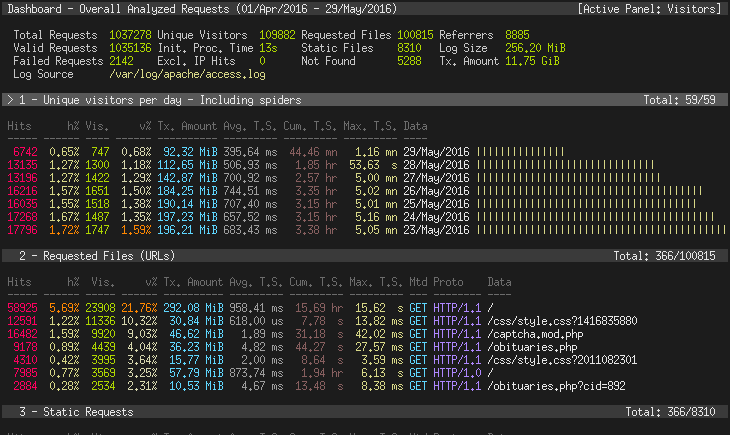

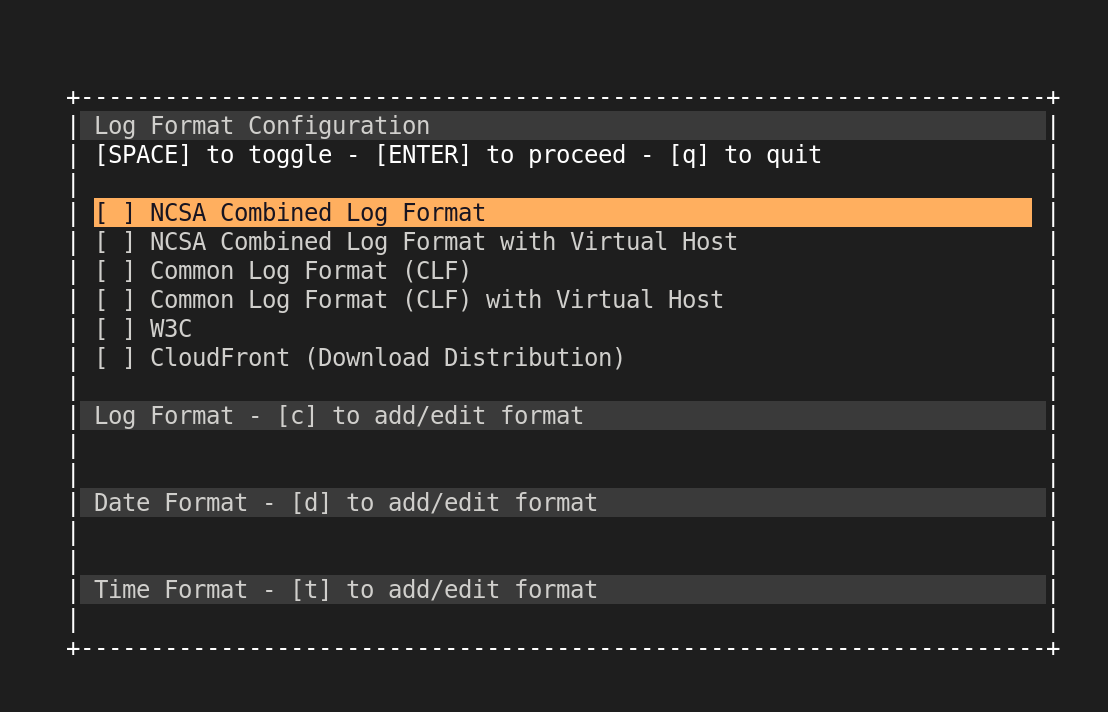

goaccess /var/log/httpd/access_log --ignore-crawlers --log-format=COMBINED

The COMBINED part could change depending on your configuration. I’m not sure what the default is on current distributions; the LogFormat and CustomLog directives in your Apache configuration tell you which format your log actually uses. I have always just used the COMBINED format, since it captures more than the COMMON format (it adds the referrer and the user agent). You can read about the format strings and other awesome stuff on the Apache website here: Apache Log Information. If you omit the --log-format option, you will be presented with a format selection screen instead (see the screenshots).

On your screen should be a cool, old-school, curses-based text user interface that updates in real time. You can tab through the sections, hit Enter to expand one, and browse its records with the arrow keys, or with the J and K keys once there are more entries than fit on screen. By default, GoAccess gives you the following panels:

- Unique visitors per day

- Requested Files (URLs)

- Static Requests

- Not Found URLs (404s)

- Visitor Hostnames and IPs

- Operating Systems

- Browsers

- Time Distribution

- Referring Sites

- HTTP Status Codes

- Geo Location
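Every one of those panels is built from fields that already sit in each log line. As a rough illustration (my own Python sketch, not GoAccess’s parser), here is a small function that splits one line in Apache’s combined format (%h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i") into named fields. The field-to-panel notes in the comments are my informal reading of the panel names, not something taken from the GoAccess source, and the sample line is modeled on the example in Apache’s log documentation.

```python
import re
from datetime import datetime

# Apache "combined" format: %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i"
# Simplified sketch: escaped quotes inside the request or user agent are not handled.
COMBINED_RE = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined(line):
    """Split one combined-format line into fields, or return None if it does not match."""
    m = COMBINED_RE.match(line)
    if m is None:
        return None
    f = m.groupdict()
    # "%r" is the raw request line, e.g. "GET /index.html HTTP/1.1".
    method, _, rest = f["request"].partition(" ")
    path, _, protocol = rest.partition(" ")
    # Panel notes below are an informal guess at which field feeds which panel.
    return {
        "host": f["host"],                                              # Visitor Hostnames and IPs
        "time": datetime.strptime(f["time"], "%d/%b/%Y:%H:%M:%S %z"),  # Time Distribution
        "method": method,
        "path": path,                                                   # Requested Files, Static Requests, 404s
        "protocol": protocol,
        "status": int(f["status"]),                                     # HTTP Status Codes
        "bytes": None if f["size"] == "-" else int(f["size"]),         # "-" means no body was sent
        "referrer": f["referrer"],                                      # Referring Sites
        "agent": f["agent"],                                            # Browsers and Operating Systems
    }


SAMPLE = ('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" '
          '200 2326 "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"')

if __name__ == "__main__":
    record = parse_combined(SAMPLE)
    print(record["host"], record["path"], record["status"], record["time"].hour)
```

Seeing the fields laid out like this also explains why COMBINED matters: with the COMMON format the last two quoted fields are missing, so there is nothing for the referrer and browser/OS panels to work with.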

That’s a lot of info, right? And that’s just the beginning: you can expand and sort the panels in all kinds of ways when you want a quick look at your web traffic. If you run the following command:

goaccess --help

…you will be greeted with a long list of options.

LOG & DATE FORMAT OPTIONS

--log-format=<logformat> - Specify log format. Inner quotes need escaping, or use single quotes.

--date-format=<dateformat> - Specify log date format. e.g., %d/%b/%Y

--time-format=<timeformat> - Specify log time format. e.g., %H:%M:%S

--datetime-format=<dt-format> - Specify log date and time format. e.g., %d/%b/%Y %H:%M:%S %z

USER INTERFACE OPTIONS

-c --config-dialog - Prompt log/date/time configuration window.

-i --hl-header - Color highlight active panel.

-m --with-mouse - Enable mouse support on main dashboard.

--color=<fg:bg[attrs, PANEL]> - Specify custom colors. See manpage for more details.

--color-scheme=<1|2|3> - Schemes: 1 => Grey, 2 => Green, 3 => Monokai.

--html-custom-css=<path.css> - Specify a custom CSS file in the HTML report.

--html-custom-js=<path.js> - Specify a custom JS file in the HTML report.

--html-prefs=<json_obj> - Set default HTML report preferences.

--html-report-title=<title> - Set HTML report page title and header.

--html-refresh=<secs> - Refresh HTML report every X seconds (>=1 or <=60).

--json-pretty-print - Format JSON output w/ tabs & newlines.

--max-items - Maximum number of items to show per panel. See man page for limits.

--no-color - Disable colored output.

--no-column-names - Don't write column names in term output.

--no-csv-summary - Disable summary metrics on the CSV output.

--no-html-last-updated - Hide HTML last updated field.

--no-parsing-spinner - Disable progress metrics and parsing spinner.

--no-progress - Disable progress metrics.

--no-tab-scroll - Disable scrolling through panels on TAB.

--tz=<timezone> - Use the specified timezone (canonical name, e.g., America/Chicago).

SERVER OPTIONS

--addr=<addr> - Specify IP address to bind server to.

--unix-socket=<addr> - Specify UNIX-domain socket address to bind server to.

--daemonize - Run as daemon (if --real-time-html enabled).

--fifo-in=<path> - Path to read named pipe (FIFO).

--fifo-out=<path> - Path to write named pipe (FIFO).

--origin=<addr> - Ensure clients send this origin header upon the WebSocket handshake.

--pid-file=<path> - Write PID to a file when --daemonize is used.

--port=<port> - Specify the port to use.

--real-time-html - Enable real-time HTML output.

--ssl-cert=<cert.crt> - Path to TLS/SSL certificate.

--ssl-key=<priv.key> - Path to TLS/SSL private key.

--user-name=<username> - Run as the specified user.

--ws-url=<url> - URL to which the WebSocket server responds.

--ping-interval=<secs> - Enable WebSocket ping with specified interval in seconds.

FILE OPTIONS

- - The log file to parse is read from stdin.

-f --log-file=<filename> - Path to input log file.

-l --debug-file=<filename> - Send all debug messages to the specified file.

-p --config-file=<filename> - Custom configuration file.

-S --log-size=<number> - Specify the log size, useful when piping in logs.

--external-assets - Output HTML assets to external JS/CSS files.

--invalid-requests=<filename> - Log invalid requests to the specified file.

--no-global-config - Don't load global configuration file.

--unknowns-log=<filename> - Log unknown browsers and OSs to the specified file.

PARSE OPTIONS

-a --agent-list - Enable a list of user-agents by host.

-b --browsers-file=<path> - Use additional custom list of browsers.

-d --with-output-resolver - Enable IP resolver on HTML|JSON output.

-e --exclude-ip=<IP> - Exclude one or multiple IPv4/6. Allows IP ranges. e.g., 192.168.0.1-192.168.0.10

-H --http-protocol=<yes|no> - Set/unset HTTP request protocol if found.

-M --http-method=<yes|no> - Set/unset HTTP request method if found.

-o --output=file.html|json|csv - Output either an HTML, JSON or a CSV file.

-q --no-query-string - Strip request's query string. This can decrease memory consumption.

-r --no-term-resolver - Disable IP resolver on terminal output.

--444-as-404 - Treat non-standard status code 444 as 404.

--4xx-to-unique-count - Add 4xx client errors to the unique visitors count.

--all-static-files - Include static files with a query string.

--anonymize-ip - Anonymize IP addresses before outputting to report.

--anonymize-level=<1|2|3> - Anonymization levels: 1 => default, 2 => strong, 3 => pedantic.

--crawlers-only - Parse and display only crawlers.

--date-spec=<date|hr|min> - Date specificity. Possible values: `date` (default), `hr` or `min`.

--db-path=<path> - Persist data to disk on exit to the given path or /tmp as default.

--double-decode - Decode double-encoded values.

--enable-panel=<PANEL> - Enable parsing/displaying the given panel.

--fname-as-vhost=<regex> - Use log filename(s) as virtual host(s). POSIX regex is passed to extract virtual host.

--hide-referrer=<NEEDLE> - Hide a referrer but still count it. Wild cards are allowed. i.e., *.bing.com

--hour-spec=<hr|min> - Hour specificity. Possible values: `hr` (default) or `min` (tenth of a min).

--ignore-crawlers - Ignore crawlers.

--ignore-panel=<PANEL> - Ignore parsing/displaying the given panel.

--ignore-referrer=<NEEDLE> - Ignore a referrer from being counted. Wild cards are allowed. i.e., *.bing.com

--ignore-statics=<req|panel> - Ignore static requests. req => Ignore from valid requests. panel => Ignore from valid requests and panels.

--ignore-status=<CODE> - Ignore parsing the given status code.

--keep-last=<NDAYS> - Keep the last NDAYS in storage.

--no-ip-validation - Disable client IPv4/6 validation.

--no-strict-status - Disable HTTP status code validation.

--num-tests=<number> - Number of lines to test. >= 0 (10 default)

--persist - Persist data to disk on exit to the given --db-path or to /tmp.

--process-and-exit - Parse log and exit without outputting data.

--real-os - Display real OS names. e.g, Windows XP, Snow Leopard.

--restore - Restore data from disk from the given --db-path or from /tmp.

--sort-panel=PANEL,METRIC,ORDER - Sort panel on initial load. e.g., --sort-panel=VISITORS,BY_HITS,ASC. See manpage for a list of panels/fields.

--static-file=<extension> - Add static file extension. e.g.: .mp3. Extensions are case sensitive.

--unknowns-as-crawlers - Classify unknown OS and browsers as crawlers.

GEOIP OPTIONS

--geoip-database=<path> - Specify path to GeoIP database file. i.e., GeoLiteCity.dat, GeoIPv6.dat ...

OTHER OPTIONS

-h --help - This help.

-s --storage - Display current storage method. e.g., Hash.

-V --version - Display version information and exit.

--dcf - Display the path of the default config file when `-p` is not used.

Examples can be found by running `man goaccess`.

For more details visit: https://goaccess.io/

GoAccess Copyright (C) 2009-2020 by Gerardo Orellana

Experimenting with the parsing options will often help you fine-tune your result set into a more manageable and useful one. As you may have noticed, I passed the --ignore-crawlers flag above. It weeds out all the bot traffic we get bombarded with daily. If that doesn’t blow your mind… just wait, there’s more!
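Once you start stacking parsing options, it can help to build the command in one place. Below is a minimal Python sketch; the helper name build_goaccess_cmd and its parameters are my own, hypothetical ones. It only assembles an argument list from options shown in the help output above: --log-format, --ignore-crawlers, --exclude-ip (which accepts ranges such as 192.168.0.1-192.168.0.10), --ignore-status, and -o. It assumes, based on my reading of the man page, that --exclude-ip and --ignore-status can be repeated once per value; double-check that against `man goaccess` for your version.

```python
import shlex
import subprocess


def build_goaccess_cmd(log_path, output=None, log_format="COMBINED",
                       ignore_crawlers=True, exclude_ips=(), ignore_statuses=()):
    """Assemble a goaccess argv list (hypothetical helper; nothing is executed here)."""
    cmd = ["goaccess", log_path, f"--log-format={log_format}"]
    if ignore_crawlers:
        cmd.append("--ignore-crawlers")       # drop bot traffic
    for ip in exclude_ips:                    # assumed repeatable: single IPs or "start-end" ranges
        cmd.append(f"--exclude-ip={ip}")
    for code in ignore_statuses:              # assumed repeatable: one status code per flag
        cmd.append(f"--ignore-status={code}")
    if output:
        cmd += ["-o", output]                 # .html, .json or .csv selects the report type
    return cmd


def run(cmd):
    """Run the command, raising CalledProcessError if goaccess exits non-zero."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = build_goaccess_cmd(
        "/var/log/httpd/access_log",
        exclude_ips=["192.168.0.1-192.168.0.10"],
        ignore_statuses=[301],
    )
    # Print a copy-pasteable shell command instead of running it.
    print(shlex.join(cmd))
```

Printing the command with shlex.join before handing it to run() is a cheap way to see exactly which filters a report was built with, which matters when you later compare numbers between reports.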

Exporting to HTML and JSON

Run the following command:

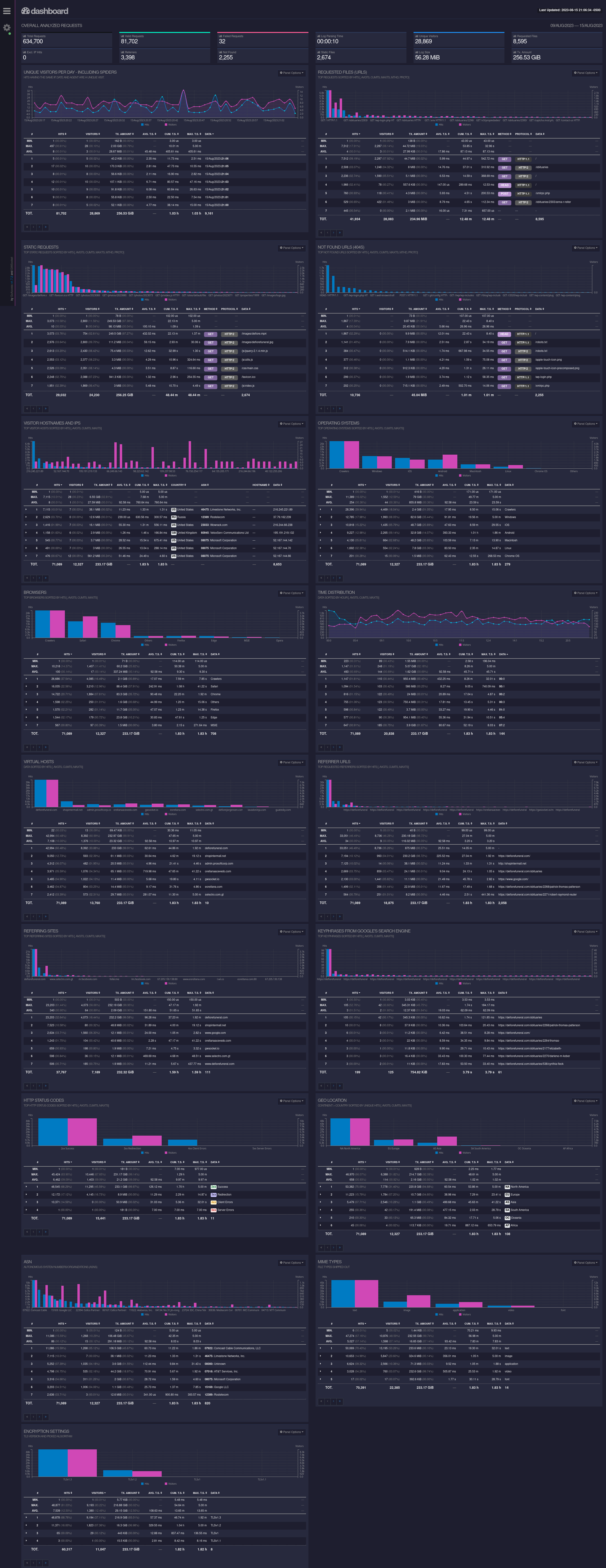

goaccess /var/log/httpd/access_log -o ~/mysite.html --ignore-crawlers --log-format=COMBINED

After you run it, you should have a file in your home folder called mysite.html (GoAccess picks the output format from the file extension: .html, .json, or .csv). Open it in your favorite browser, and you will instantly see the power of GoAccess.

All of those lost hours Googlin’ for an answer. All those hours looking at paid plans that often reach hundreds of dollars a month… no more… you’ve found it! Even so, this is only the beginning of your journey!

As you start experimenting with the export options, you’ll find that static HTML (as we just did), live HTML (yes, you can do that too, with --real-time-html!), and JSON effortlessly provide those long-sought answers. Again, for the low, low price of zero dollars. You’re welcome! Your homework from here is to set up some cron jobs that take snapshots of your data (tagged by date and time as appropriate) and then use that data to drive your online efforts. You can really do so much more with this tool.
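For the cron homework, here is one way a snapshot job could look, sketched in Python under a few assumptions of my own: a mapping of hypothetical site names to per-site log paths (like the per-domain logs I mentioned earlier), an output directory of your choosing, and one report per site per day named <site>-YYYY-MM-DD.<fmt>. Because GoAccess picks the output format from the -o extension, changing fmt to html or csv changes the report type.

```python
import subprocess
from datetime import date
from pathlib import Path


def snapshot_cmds(sites, out_dir, day=None, fmt="json"):
    """Build one goaccess argv per site, writing <site>-<YYYY-MM-DD>.<fmt> into out_dir.

    `sites` is a hypothetical {site_name: log_path} mapping supplied by you.
    """
    day = day or date.today()
    cmds = []
    for site, log_path in sorted(sites.items()):
        out_file = Path(out_dir) / f"{site}-{day.isoformat()}.{fmt}"
        cmds.append([
            "goaccess", log_path,
            "--log-format=COMBINED",
            "--ignore-crawlers",
            "-o", str(out_file),
        ])
    return cmds


def run_snapshots(cmds):
    """Run each command in turn; check=True stops at the first goaccess failure."""
    for cmd in cmds:
        subprocess.run(cmd, check=True)
```

A daily cron entry would then call a small wrapper script that passes your real site map to snapshot_cmds and hands the result to run_snapshots. Keeping the date in the filename means older snapshots are never overwritten, so you can compare them month over month. For the live dashboard instead, the help output points to adding --real-time-html (and --daemonize to keep it in the background) to a single HTML command.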

If you’re an application developer, you can ingest these snapshots into your application as JSON to mold and shape or record as you see fit. The options are truly limitless. At the end of the day, you paid nothing to get access to a sharp tool that you can now put on the end of your spear to cut past those online SaaS companies and forge your own destiny. Good luck!
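On the ingestion side, here is a small Python sketch for reading one of those JSON snapshots. The key names are assumptions, not a documented schema I can vouch for: a top-level general object with totals such as total_requests and unique_visitors, and a requests panel whose data list holds entries with the URL in data and the count under hits.count. Check them against a report generated by your own GoAccess version (--json-pretty-print makes that easier). The code uses .get() throughout, so a missing key yields None or an empty list rather than a crash.

```python
import json
import sys


def load_report(path):
    """Load a GoAccess JSON report from disk."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def summarize(report):
    """Pull a few headline totals from the (assumed) 'general' section."""
    general = report.get("general", {})
    return {key: general.get(key)
            for key in ("total_requests", "unique_visitors", "failed_requests")}


def top_requests(report, n=5):
    """Return up to n (url, hits) pairs from the (assumed) 'requests' panel, busiest first."""
    items = report.get("requests", {}).get("data", [])

    def hits(item):
        # Assumed layout: {"data": "/path", "hits": {"count": N, ...}, ...}
        return item.get("hits", {}).get("count", 0)

    ranked = sorted(items, key=hits, reverse=True)
    return [(item.get("data"), hits(item)) for item in ranked[:n]]


if __name__ == "__main__" and len(sys.argv) > 1:
    report = load_report(sys.argv[1])
    print(summarize(report))
    for url, count in top_requests(report):
        print(f"{count:>8}  {url}")
```

Saved as a script (the name is up to you), it can be pointed at any dated snapshot, for example `python3 summarize_goaccess.py ~/reports/blog-2024-01-31.json`, and the same functions can feed a database or dashboard in your own application.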